About the project

Testing, reporting on and prototyping for the Sydney Open website was the final project of the Professional Certificate in Web Accessibility course offered by the University of South Australia. As part of a team of three, I was in charge of the testing.

Sydney Open / University of South Australia

November 2019

Goal & Deliverables

Our brief was to evaluate the accessibility of the Sydney Open website, benchmark it against a competitor, and provide a report and a low-resolution prototype addressing the accessibility issues.

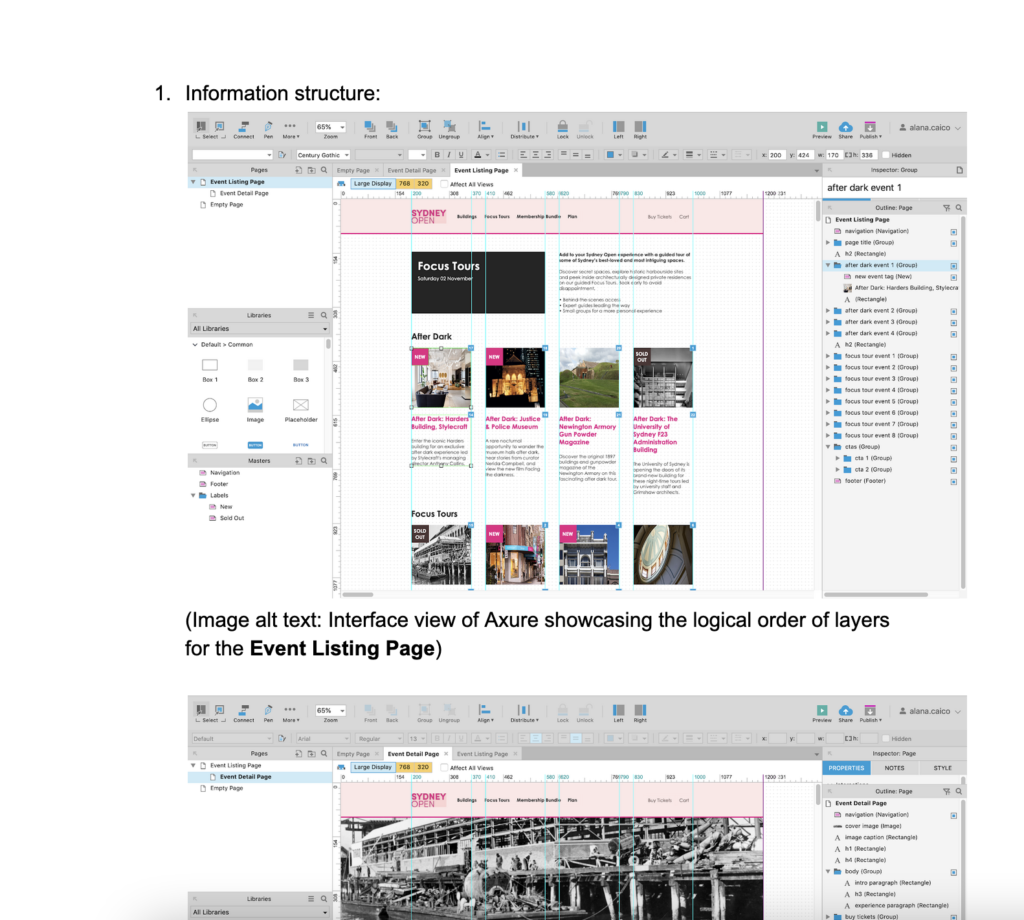

Our deliverables consisted of a report on the accessibility of the website, which highlighted the main issues, noted where the website was doing well, and provided recommendations for improvement. We also added an annexe detailing each issue, and a working low-resolution prototype.

Preparation

Before starting the tests, we discussed as a group the scope, baseline, conformance target and potential tools for automated testing. We familiarized ourselves with the Website Accessibility Conformance Evaluation Methodology (WCAG-EM) 1.0, which helped us define a list of tasks we could complete in the time available for the project.

We used Trello to list and track the progress of each task, Slack as our communication tool, and Google Docs for our documents.

Once we had agreed on the approach, I started the testing part of the project, using a mix of automated and manual testing. For the automated testing, I selected tools based on advice from the lecturers and on the Web Accessibility Evaluation Tools List.

- WAVE was used to check pages against the WCAG guidelines

- PowerMapper was used to check pages against the WCAG guidelines and usability guidelines

- The W3C validator was used to check markup of the pages

- TS Bookmarklet was used to check line height changes on the pages

PowerMapper and WAVE provided a lot of information. One of the challenges was to identify false positives, look into findings that were unclear, and remove irrelevant feedback.

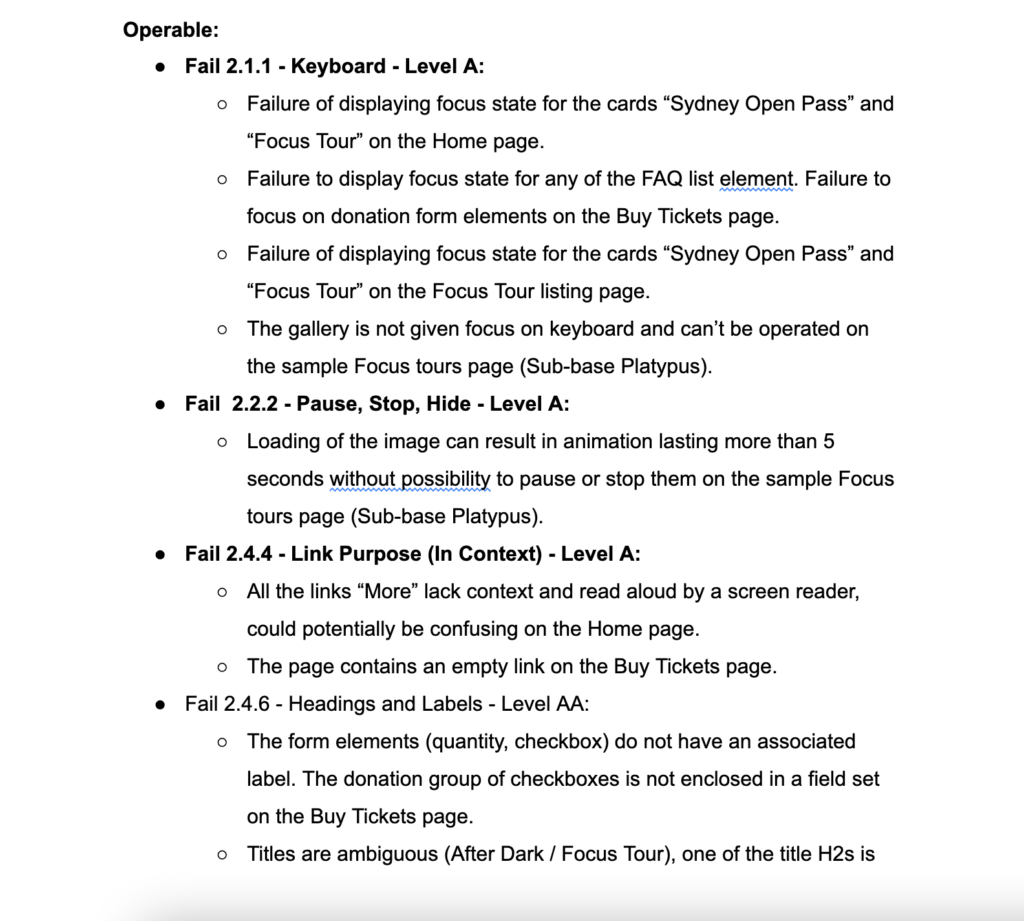

For the manual testing, I used the WCAG guidelines, broken into the POUR (Perceivable, Operable, Understandable and Robust) structure. I went through the sample pages selected in the first phase and checked each Level A and AA success criterion one by one. This task was the most time-consuming one. One of the difficulties was understanding the failures as defined by WCAG. When I was blocked or very unsure, we discussed these points as a group during our catch-ups to find a solution and move forward.

Overall, the website tested very well on some points (e.g. almost all images had relevant alternative text, and most text contrast ratios met the guidelines) and failed on others (e.g. the relationship between form elements and labels). While the testing was under way, one teammate was able to start the final report and write recommendations, while the other started the prototype addressing the issues that were discovered.
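To make two of these checks concrete, here is a minimal Python sketch of a contrast ratio calculation and a label association check. It is an illustration only, not how WAVE or PowerMapper work internally and not the procedure we followed. The contrast formula follows the WCAG 2.x definition of relative luminance; the function names, the `LabelChecker` class and the sample markup are all hypothetical, and the label check assumes simple static HTML (it ignores scripting, `title` attributes and other edge cases).

```python
from html.parser import HTMLParser


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of an sRGB colour such as '#767676' (WCAG 2.x formula)."""
    hex_color = hex_color.lstrip("#")
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Linearise each sRGB channel before weighting it.
    r, g, b = [
        c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
        for c in channels
    ]
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colours, from 1 (identical) to 21 (black on white)."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


class LabelChecker(HTMLParser):
    """Hypothetical helper: records form controls that have no obvious label."""

    SKIPPED_TYPES = {"hidden", "submit", "button", "reset", "image"}

    def __init__(self):
        super().__init__()
        self.label_targets = set()  # values of <label for="...">
        self.candidates = []        # ids of controls not wrapped in a <label>
        self.label_depth = 0        # > 0 while inside a <label> element

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "label":
            self.label_depth += 1
            if attributes.get("for"):
                self.label_targets.add(attributes["for"])
        elif tag in ("input", "select", "textarea"):
            if attributes.get("type") in self.SKIPPED_TYPES:
                return
            if attributes.get("aria-label") or attributes.get("aria-labelledby"):
                return
            if self.label_depth == 0:
                self.candidates.append(attributes.get("id"))

    def handle_endtag(self, tag):
        if tag == "label" and self.label_depth > 0:
            self.label_depth -= 1


def unlabelled_controls(html: str) -> list:
    """Return the ids (or '(no id)') of controls without an associated label."""
    checker = LabelChecker()
    checker.feed(html)
    checker.close()
    return [
        control_id or "(no id)"
        for control_id in checker.candidates
        if control_id not in checker.label_targets
    ]


# Made-up markup for illustration; it is not taken from the audited website.
SAMPLE_FORM = (
    '<form><label for="name">Name</label><input id="name" type="text">'
    '<input id="email" type="email">'
    '<label>Phone <input id="phone" type="tel"></label></form>'
)
```

For normal-size text, Level AA asks for a contrast ratio of at least 4.5:1, so `contrast_ratio("#777777", "#ffffff")` falls just short while `contrast_ratio("#767676", "#ffffff")` passes. Calling `unlabelled_controls(SAMPLE_FORM)` flags only the `email` field, because `name` is referenced by a `for` attribute and `phone` is wrapped in its label. A script like this can only point at candidates; each result still needs the kind of manual review described above.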

Conclusion

This project and the overall work involved in the Certificate were a really good introduction to web accessibility. They allowed me to learn auditing techniques and apply them to real-life websites and interfaces.

Working as a team to discover accessibility issues, then feeding them into the report and into suggested improvements for the prototype, was a great experience.